Quantifying Skill in Lincoln-Douglas Debate

Sachin Shah

April 22, 2021

Note: this article was written about a month prior to the 2021 Tournament of Champions.

A difficult question for the LD debate community is how a debater’s skill can be measured effectively. This article will explore objective skill estimation concepts to provide some insight to this question. For the purpose of this article, skill will be represented by a numerical value that represents how holistically competitive a debater is at the activity.

I posit that knowing precise skill levels is useful for two specific aspects of debate.

- Community Norms: The LD community places immense value on the perception of a debater’s skill. Round Robin invites, camp hires, hourly rates, and more all seem to be influenced by perceived notions of skill. These notions of skill might contain subjective bias where just a few rounds are used to determine how good a debater is. It would be fairer to have a standard objective method that allows us to specifically quantify a debater’s skill.

- Education: A common practice for debaters is to watch “high-level” rounds to see ideal strategy and execution of various types of positions. When deciding between a few rounds that contain a certain type of debate, one might utilize their perception of skill to understand if the round is a “good” one to watch. Usually, coaches will recommend rounds based on their personal experience; however, this is still a subjective process using limited data and is hard to scale to process lots of rounds. An objective skill metric could be used as a heuristic for judging a round’s quality instead, which removes the personal lens of an individual coach.

Many approximations (or proxies) for skill have been discussed; this article will examine the following five[1]:

Round Data Used for Skill Calculations Each method will use data from the 2020 – 2021 national varsity circuit debate season[4]. This dataset includes 56 tournaments and over 17k rounds. Each round is timestamped and includes the affirmative and negative debater’s school and name as displayed on tabroom.com. Bid information is pulled from NSD Update’s bid list.

Grading Metrics for each Method

In each round, the debater with the higher skill estimate is considered (or called) the predicted winner. We will use two criteria to evaluate the accuracy of various skill estimation methods.

- Predictive Power is a measure of how accurate a model is at predicting round results based on past results. This means we use past data available, at the time of a round occurring, to predict who will win the next round.

- Explanatory Power is a measure of how accurate the model is at explaining why round results occurred. This means the final skill estimations at the end of the season are used to show who should have won any past round according to the model.

The baseline for both predictive and explanatory power is 50%. This represents the “random method” where debater skills are uniform random values. If any of the proposed methods are equal to 50%, the method would be just as good as guessing and thus would not be a useful metric at all. If a proposed method is below 50%, it would have found the opposite of skill.

Method 1: Bid Counts

Naively, one would think the number of bids a debater could be a proxy for skill. Winning multiple bid rounds likely means a debater performs well in pre-eliminations and reaches late eliminations often. Thus, having more bids might correlate with having high skill.

Using pure bid counts, the top 5 placed skilled debaters would be:

- Strake Jesuit JG and Strake Jesuit BE

- Harrison AA

- Harrison GC, Strake ZD, Dulles AS and Harker AR

A problem is immediately noticeable; there are many ties for each bid count level. We can address this issue with a simple modification. Weight bids by how “difficult” they were to earn. Specifically, if K debaters receive bids at a tournament with N competitors, those bids should have weight 1 – (K/N). Equivalently, if one would have to be in the xth percentile of competitors to earn a bid, then the bid has weight x. For example, a finals bid with 50 competitors would have a weight of 1 – (2/50) or 0.96.

This method produces the following top 5 skilled debaters:

- Strake Jesuit JG

- Strake Jesuit BE

- Harrison AA

- Dulles AS

- Strake Jesuit ZD

However, we see another drawback to this method is that it only helps identify the skill level for a small number of debaters. Discussing predictive or explanatory power would not make sense for this method since less than 10% of debaters have a bid, so the vast majority of rounds would be between two 0-skilled debaters. This method is then easily ruled out.

Method 2: Win Rates

The next most obvious skill metric would be using a debater’s win rate: their total number of wins W divided by the number of rounds R they have participated in.

Bonus Stat: The greatest number of rounds anyone competed in during this season so far is 152!

The top 5 skilled debaters would then be[5]:

- Strake Jesuit ZD

- Harvard-Westlake SM

- Harker AR

- Bellarmine EG

- Archbishop Mitty RD

The predictive power is 60% and the explanatory power is 76%. This method shows promise; however, it does contain a drawback: the difficulty of the win does not affect one’s skill level. This method treats every round with the same weight. Beating a “good” debater is worth the same as beating a “bad” debater in this model. In addition, it does not adjust for quantity of rounds won; after winning a single round, one would have maxed out the rating scale at 100% (it’s impossible to have a higher than 100%-win rate). So, this method can provide some help, but is insufficient on its own.

Method 3: Speaker Points

Speaker points might be a good alternative to win rates. Those who win often likely have a higher speaker point average. Additionally, a good aspect of this method is that speaker points are invariant to who won, meaning both debaters can be rewarded for having high skill.

Bonus Stat: The most popular speaker point value assigned during the 2020 – 2021 season was a 29 and the second most popular was a 28.5!

Using this method, the top 5 skilled debaters would then be[6]:

- Bellarmine EG

- Strake Jesuit BE

- Strake Jesuit ZD

- Scarsdale CC

- Exeter AT

The predictive power is 67% and the explanatory power is 73%. This method does better at predicting results than the win rate method; however, it is slightly worse at explaining the results. There are two main concerns with utilizing speaker points. First, speaks are inherently subjective; judges choose their own manner in which to award speaker points. Some judges are “point fairies” while otherwise give out low averages. This could skew one’s rating depending on the judges they happen to get by chance instead of their intrinsic skill. Second, there is no reward for making it to elimination rounds or doing particularly well at a tournament. In fact, only pre-elimination rounds would matter for one’s rating using this method. Because elimination rounds have no effect, it might be difficult to distinguish amongst the top skilled debaters.

Method 4: Elo Ratings

A more rigorous approach for estimating skills would be to employ a rating system such as Elo[7]. This system updates ratings based on the difference between the actual and expected outcome for a given round. Losing against a debater who is rated much higher than you will have a minimal effect while defeating a higher rated debater will have a larger effect.

The top 5 skilled debaters are then:

- Strake Jesuit ZD

- Harker AR

- Harvard-Westlake SM

- Strake Jesuit BE

- Harrison AA

The predictive power is 64% and the explanatory power is 76%. This method is slightly better than the win rate method, which is expected because Elo ratings more rigorously account for round difficulty. One drawback is that some ratings might be inaccurate for debaters who have only participated in a few rounds.

Method 5: Glicko-2 Ratings

The Glicko-2 Rating[8] system was developed to resolve this inaccuracy with the Elo method. The principal idea is that debaters who have only debated a few times have a more “unknown” rating than someone who debates every weekend. This method accounts for this uncertainty in debater ratings when preforming rating updates. A debater’s rating is updated after every weekend of tournaments, and is based on the rounds the debater participated in.

The top 5 skilled debaters are then:

- Strake Jesuit ZD

- Harker AR

- Harvard-Westlake SM

- Dulles AS

- Bellarmine EG

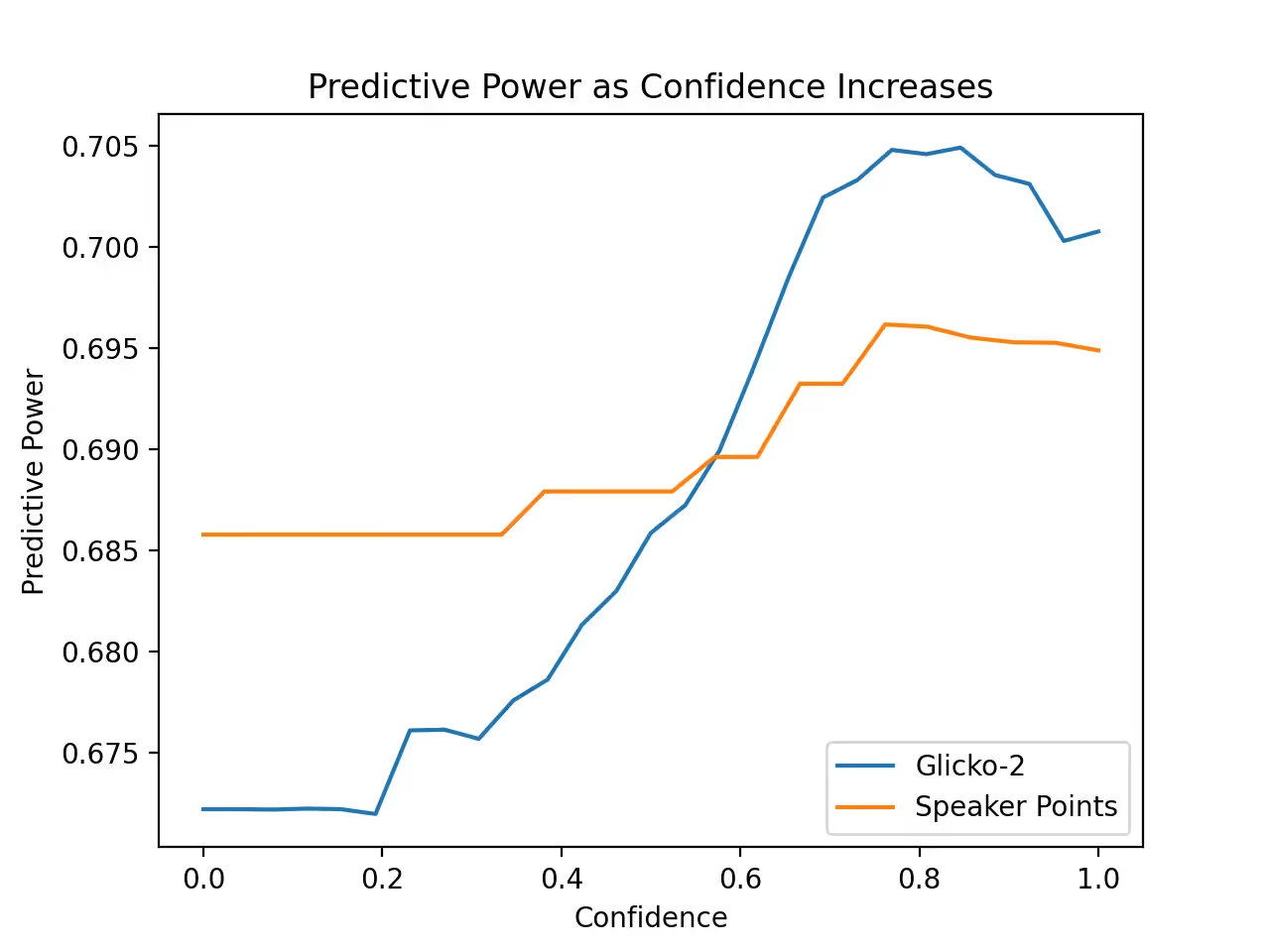

The predictive power is 57% while the explanatory power is 77%. The high explanatory power demonstrates the Glicko-2 Rating system gets closer to the “true” skill of a debater in comparison to previous methods. The primary drawback is that ratings are only updated once every weekend, meaning many rounds occur between “equally” rated debaters and thus the predictive power is low. If we update the ratings after every round, the predictive power becomes 65% and the explanatory power becomes 78%. The predictive power gets better as the system has more rounds as input, and thus becomes more “confident” in debater ratings. For example, the predictive power of the last half of the season is 68%.

One drawback to the Glicko-2 system is that the calculation steps are quite complicated. Rating updates and differences among players are more straightforward in the other systems; however, the Glicko-2 system seems to produce more reliable results.

Discussion

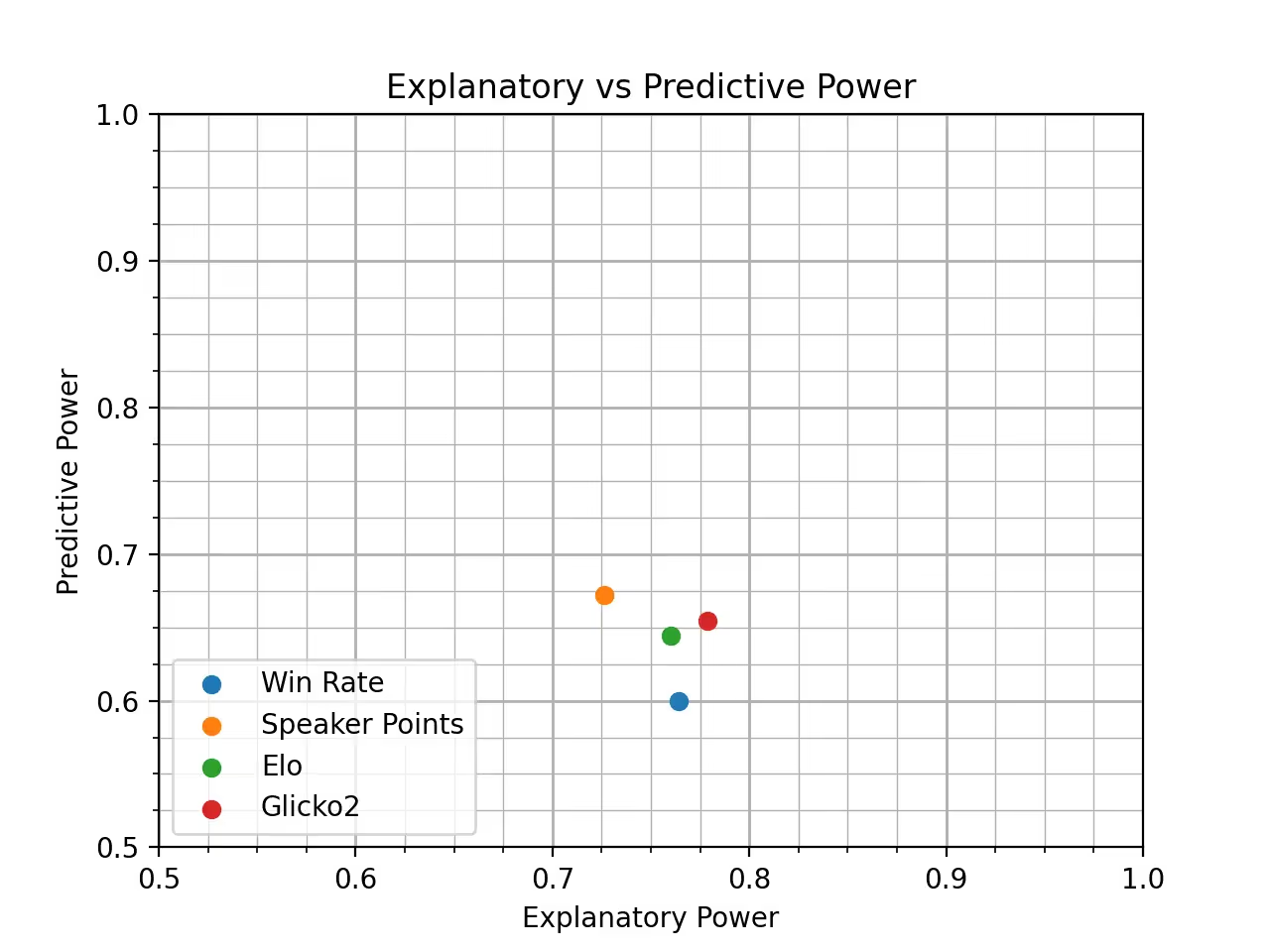

The Glicko-2 Rating system seems to be the best method for skill estimation (Figure 1). It correctly predicts who won past rounds 78% of the time. An 100% accuracy would not be achievable because upsets can and will occur.

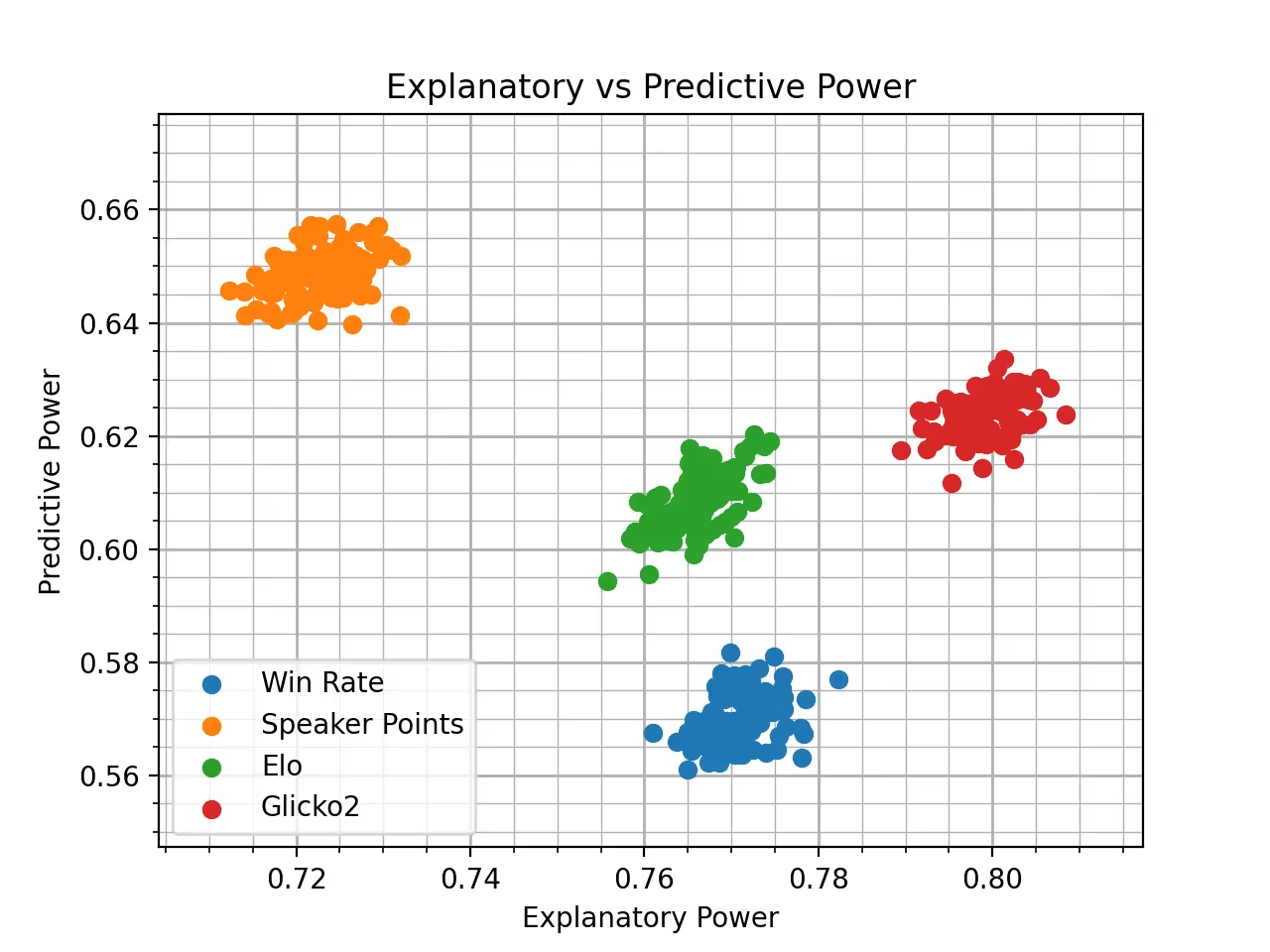

We confirm the difference between these values are significant by running each skill estimation method 100 times on a random 50% subset of the data (Figure 2).

The Glicko-2 system is closest to the upper right-hand corner suggesting it is the best system. Speaker points having a high predictive power and low explanatory power might mean the skill estimation converges fast but inaccurately. This would indicate speaker points is a good skill proxy for new debaters, but when evaluating a debater’s entire career, the inaccuracies are exacerbated. Glicko-2 takes comparatively longer to converge but excels once the confidence is high (Figure 3).

We see that the Glicko-2 system outperforms the Speaker Point system in the long-term suggesting it’s the best system to use for estimating skill for veteran debaters.

Conclusion

This article has explored objective skill metrics. The Glicko-2 Rating systems appears to be the most appropriate for estimating a debater’s skill based on their tournament performance. The Glicko-2 Rating system is well established and used in many settings such as chess.

Future work might look at more complex systems that account for different features of debate. For example, adding rewards for exceptional elimination performance or high speaker points might provide a more robust method for predicting round results. It will be interesting to see how an objective skill metric will be utilized by the community.

-----------------

[1] By no means is this list exhaustive nor the definitive method for ranking debaters. The article does not offer opinions on who the best debaters are, but rather contributes to the conversation of estimating skill based on the data. Using this article and related rankings for the purpose of winning a round would be misrepresenting the intent of the article. The intent is to inform and educate about objective skill metrics.

[2] Elo, Arpad E. The Rating of Chessplayers, Past and Present. Arco Pub., 1986.

[3] Glickman, Mark E. “Example of the Glicko-2 system.” November 30, 2013. http://www.glicko.net/glicko/glicko2.pdf

[4] Shah, Sachin (2021). Lincoln-Douglas Debate Dataset [Data file]. Retrieved from https://github.com/inventshah/LD-data

[5] Debaters must have debated in at least 12 rounds (2 tournaments) in order to appear in this top 5.

[6] Debaters must have at least 12 speaker point values (2 tournaments worth) in order to appear in this top 5.

[7] We use K = 40, and then K = 20 once a debater has competed at least 30 times. This method is briefly discussed in: Shah, Sachin. “A Statistical Analysis of Side-Bias on the 2019 January-February Lincoln-Douglas Debate Topic.” February 16, 2019. NSD Update. http://nsdupdate.com/2019/a-statistical-analysis-of-side-bias-on-the-2019-january-february-lincoln-douglas-debate-topic/

[8] Initial mu = 1500, phi = 350, sigma = 0.06, tau = 1.0.