Are Judges Just Guessing? A Statistical Analysis of LD Elimination Round Panels

Steven Adler

March 30, 2015

Debate judges often bemoan how difficult rounds are to decide: Debaters never seem to do quite enough weighing or issue comparison; evidence is frequently over-tagged; and speakers are often muddled in their delivery, even during important rebuttals. Given these concerns, one might fairly ask – can judges really tell who is winning in a debate round, or are they often grasping at straws?

This question has important implications for our beliefs in the competitive merit of the activity, as well as for possible norms going forward. National circuit LD’s high speeds, dense philosophy, and theoretical complexity are often defended as educational because they allow a more thorough discussion and evaluation of the topic—but what good are these values if even the judge cannot parse them. Meanwhile, debaters generally believe that investing hard work into debate will increase their competitive ability, and that wins in debate rounds are generally awarded on the basis of merit—but a ‘random’ decision process by judges might suggest an upper-bound on how often wins are truly deserved. As such, it is important for LD to consider whether judges can distinguish a round’s true winner.

Before dealing with these potential implications, however, we must first handle the actual data. This question is fundamentally one of statistical independence—whether the ballots cast by judges in elimination rounds can be modeled as separate random draws from a probability distribution, or whether judges agree more often than would be predicted by individual random draws. Thankfully, statistical tests exist that would allow us to test such a hypothesis.

More specifically, one might construct an ‘expected’ distribution of round outcomes (# of 3-0 Affs, 2-1 Affs, 2-1 Negs, 3-0 Negs) if judges were randomly voting over some probability distribution, and then use a Chi-square independence test to determine whether the observed distribution of outcomes is statistically significantly different from what you would see if judges were randomizing. If so, then this could be evidence either that judges can discern a winning debater (if the amount of 3-0 decisions is overrepresented), or that they are particularly bad at discerning a winner (if the amount of 2-1 decisions is overrepresented).

I have gathered data to perform such an analysis: I have recorded the available elimination round data from octas-bid LD tournaments for the 2014-15 season via Tabroom.com, and coded them along three values: whether the Aff or the Neg won; whether the decision was unanimous; and whether the elim took place before quarterfinals, or from quarterfinals on.

In sum, this provided me with a dataset of 216 elimination rounds from the Harvard, Bronx Science, Glenbrooks, Valley, Harvard-Westlake, and St. Mark’s tournaments (with the exception of Bronx Science, I included data starting with the first full elim—so full trips for Harvard, but full doubles for Valley, despite the occurrence of some run-off rounds prior to that). Among these 216 rounds, the Affirmative won 98 of them for a win-rate of 45%. 121 of the 216 rounds were unanimous, for a unanimity-rate of 56%. The percentages of specific decision outcomes are listed in the table below:

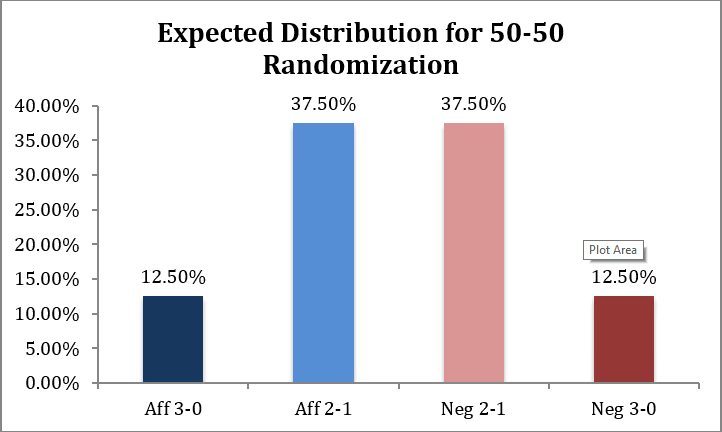

To determine whether these outcomes are consistent with each judge randomizing on their individual ballot, one must construct an ‘expected’ set of observations according to the randomization process. A naïve randomization might suggest that each judge has a 50-50 chance of voting Aff vs. Neg on their individual ballot, and that therefore the distribution would look something like this:

In Lincoln-Douglas, however, there is a strong suspicion that Negatives win more often than 50% of the time, so there is good reason to think that this ‘random’ hit-rate for Neg winning should not be 50%. Indeed, this advantage for Negatives is present in the analysis’s data, so we must adjust the expected randomization rate accordingly (bear with me here, because this will involve a little math).

Using the aggregate data, we can calculate the Neg win-rate for these elimination rounds as 54.7%. To win a round as Neg, you could either win on a 3-0 decision or on a 2-1 decision (with three possible combinations of how this 2-1 could occur, depending on which judge sits). Accordingly, if judges were randomizing their ballots, we would need to find a ‘randomization rate’ for a Negative ballot such that x^3 + 3*x^2*(1-x) = 0.547, which produces an x value of 0.531. (This math is simpler than it seems: About 15% of the time with this randomization rate you would expect a 3-0 for the Negative, and just under 40% of the time it would produce a 2-1 for the Negative, so it combines to the total Neg win-rate of 54.7%.)

So, we now know the rate at which each judge should be ‘randomly’ voting for Neg vs. Aff if it were to construct an overall Neg win-rate of 54.7%, and using that information we can construct what the expected data for the entire set of outcomes would be:

You can tell visually that these distributions are very different from one another. Whereas the expected distribution predicts far more 2-1 decisions than 3-0 decisions, in practice 3-0s are quite prevalent. A 3-0 decision for the Negative is the most likely of any individual outcome, and a 3-0 decision for the Affirmative is nearly as likely as a 2-1 decision in the Aff’s favor. According to these data and the accompanying Chi-test, there is a next-to-zero (p < 0.0001) chance that this distribution could be represented by judges randomizing in the ways described above; this is relatively strong evidence that judges are able to discern winners and that they generally agree with one another on this distinction, or at least more than mere chance would predict.

Yet a plausible objection here might be that maybe the elimination round data need to be further segmented. For instance, perhaps the data do not meet this randomization because judges can easily distinguish between winners and losers in early elimination rounds, which typically contain more-lopsided matchups, but that in late elimination rounds the decision is much murkier. In fact, I find some support for this hypothesis, though it may be an artifact of a smaller sample-size for this segment.

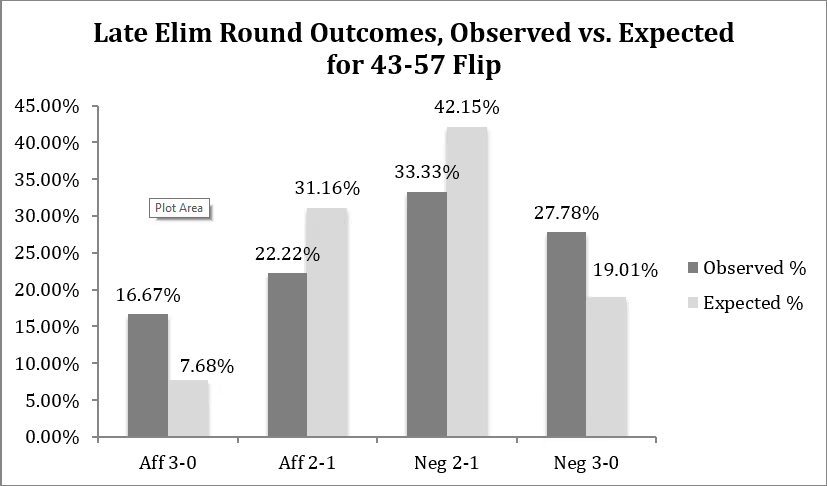

To evaluate this hypothesis, I replicated the above analysis, but pared down to the 36 coded rounds that took place in quarterfinals or later. In these rounds, the Neg side-bias was even more pronounced, with Neg winning 61% of elimination rounds, so the ‘expected’ randomization rate on ballots to achieve such an overall win-rate would be 57% for the Neg and 43% for the Aff. This creates the following expected distribution, compared to the actual observed distribution for these late elimination rounds:

Once again 3-0 decisions seem to be more prevalent than would be expected by randomization data, but the Chi-square test does not come back statistically significant at a p<0.05 level (p=0.077). For this data-set, therefore, judges’ decisions could be modeled by individual random draws from a 43-57 probability distribution, and we cannot establish statistical significance for differentiating the observed from those random draws. According to this p-threshold, we cannot reject the null hypothesis that judges are essentially randomizing their individual decisions in late elimination rounds, though we are very close to doing so.

As noted before, this is certainly not an ironclad finding: The observed p-value of 0.077 is very close to the typical significance threshold of 0.05, and there is certainly reason to think that a larger dataset could reduce uncertainty/variance and thus achieve statistical significance. Additionally, there are other plausible explanations, such as debaters sometimes targeting 2 judges on a panel, or lopsided prefs such that a debater is more likely to only win 2 judges; more complete data would help evaluate these hypotheses. But for the time being, the data are inconclusive about judges’ ability to consistently discern winners in late elims, and that might give the community some pause.

Overall, the national circuit LD community should take relative solace in these findings: Even though judges often gripe about the difficulty of resolving rounds, and it would be easy to fall into believing that most decisions are mere rationalizations, there seems to be something about these decisions that persists across judges, causing 3-0s to be overrepresented. We are likely justified in believing that decisions are well-merited. But even if we were not, debaters could (and should) still continue working on skills that distinguish between them and their competitors—clarity, evidence-comparison, crystallization. Meanwhile, LD might consider structural forms (say, lengthening speeches) to allow debaters to distinguish themselves even further and increase the chance that debaters’ skills can manifest themselves in round—but again, the situation is not critical. For the first time, there is evidence that judges in LD are not merely randomizing in their decisions about for whom to vote in elimination rounds, and this should give us some confidence that the activity has not gone entirely off the rails.